Why the Generative AI Renaissance Means Big Legal Headaches

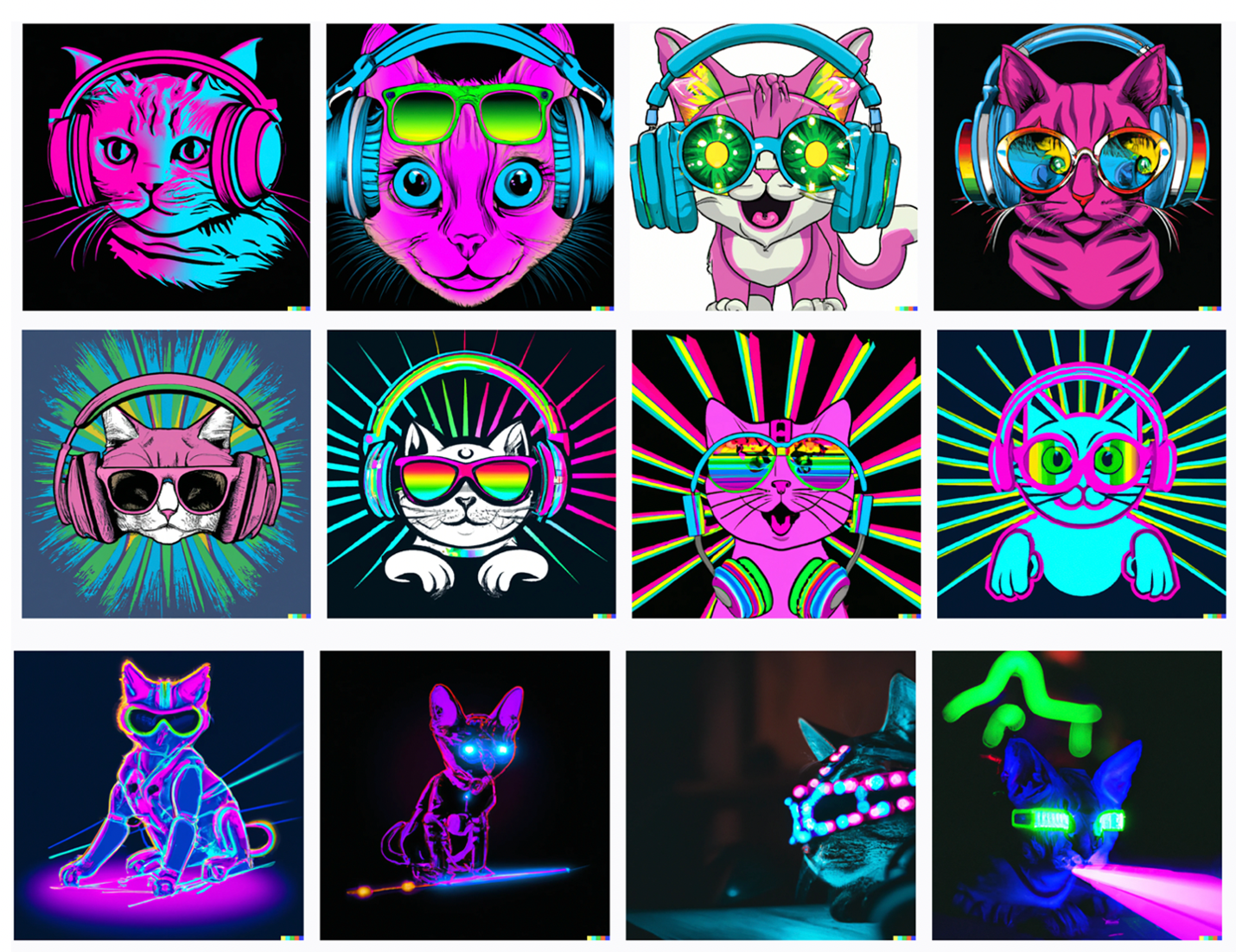

Over the last few months, social media feeds have been overrun with at times bizarre AI-generated images from DALL-E 2. AI-generated versions of ourselves or our friends throughout time and space, via MyHeritage, have swept across Facebook and LinkedIn alike. And most recently, a deluge of AI-powered text from ChatGPT has people questioning their own eyes and ears.

This dramatic entrance of next-generation AI-generated art and language into the mainstream happened faster than any of us could have envisioned. While the tech itself is not all new, what is different is that anyone from your 75-year-old aunt to your 5-year-old neighbor can use it without any sort of deep technical understanding.

What does the mainstreaming of generative AI mean for the practice of law and the forensic analysis of evidence? What happens when art and language can be generated by millions of non-data scientists using AI systems? What happens if these powerful AI and machine learning technologies are deployed by bad actors not bound by the protective terms of service of mainstream providers like OpenAI?

This article will explain what the heck generative AI is, how it works, the risks it might pose, and what we as legal practitioners and technologists can do to face this brave new AI-generated dataverse.

What the Heck is Generative AI?

Generative AI refers to a type of artificial intelligence that can generate new content, such as text, images, audio, or video, based on simple human prompts. More recent generative AI tools do not require any technical skill to use and have been widely adopted and shared across social media.

Some examples of generative AI include:

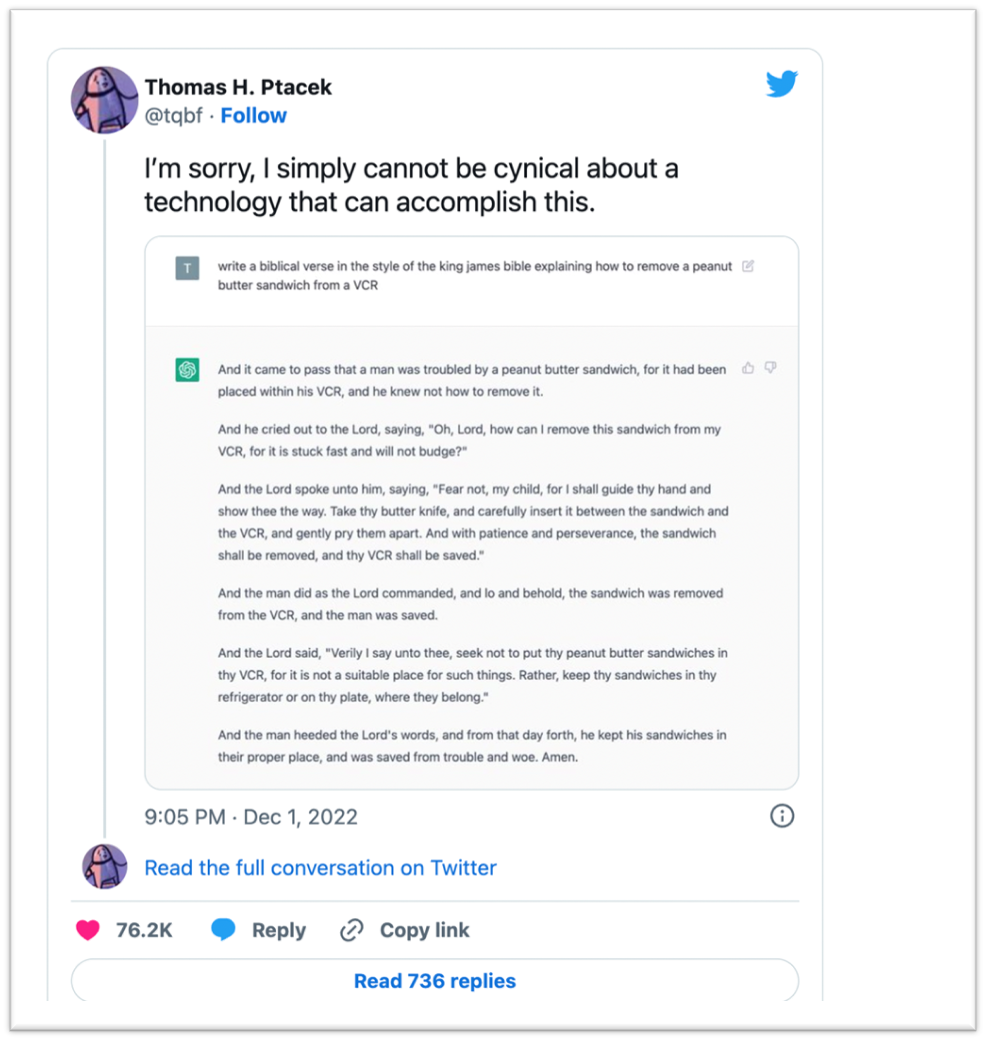

A great poem ChatGPT came up with for Stephen Herrera and me on a recent podcast

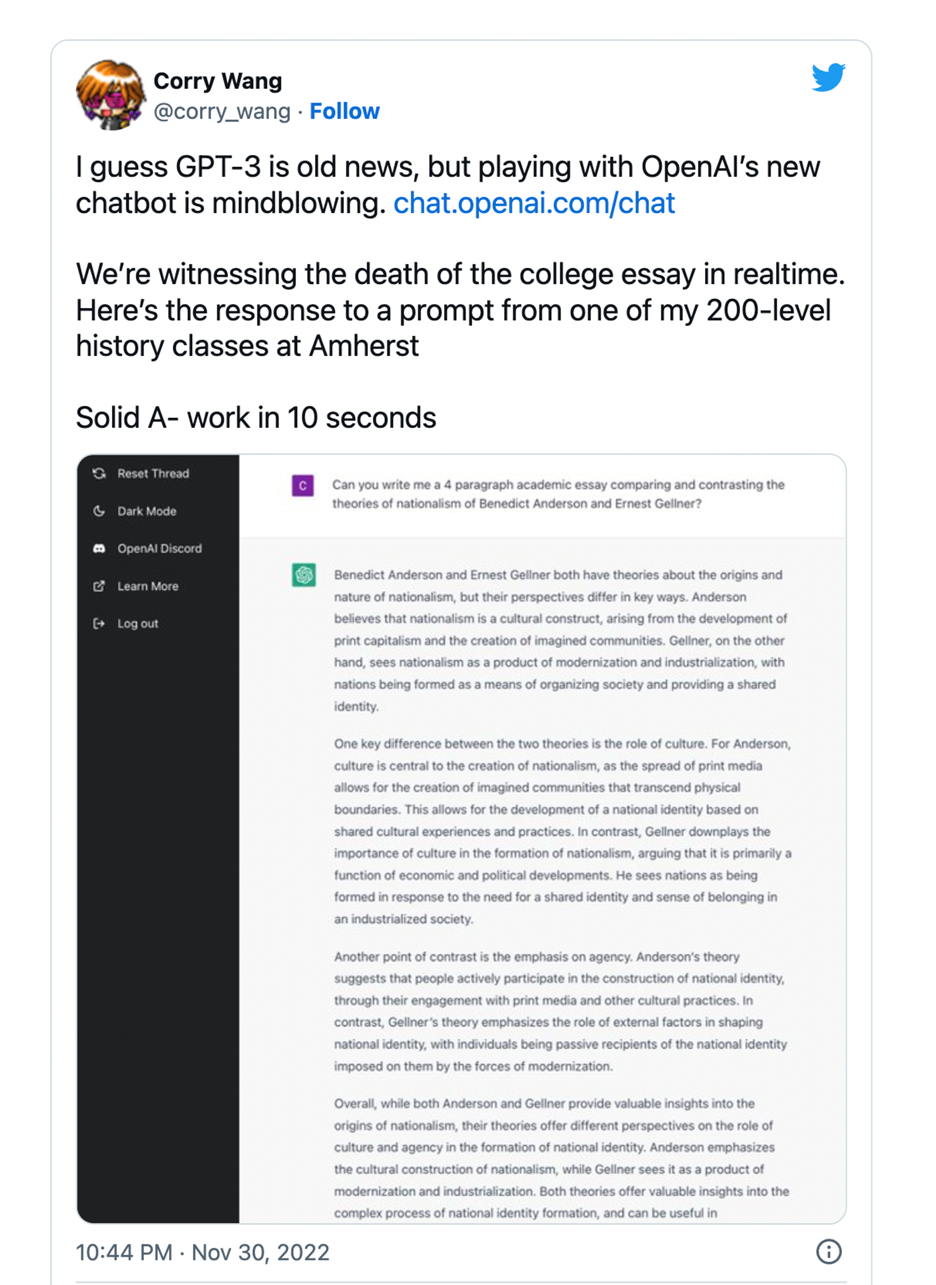

1. Text generation: Generating new sentences or paragraphs based on a given prompt or input text. This can be used for tasks such as summarization, translation, or creating realistic-sounding dialogue for virtual assistants. An example is ChatGPT, powered by GPT-3.5 technology.

2. Image generation: Generating new images from scratch via text-to-image prompts, or modifying existing images in creative ways. This can be used for tasks such as creating photorealistic images of objects or scenes that don't exist or adding new elements to an existing image. Common examples are Lensa and DALL-E 2.

3. Audio generation: Generating new audio clips, such as music or sound effects, based on a given input or set of instructions. This can be used for tasks such as creating custom soundtracks for videos or games or generating realistic-sounding speech for virtual assistants. Many companies are working on AI audio generation, including OpenAI, Google, and Baidu on the research side, as well as companies like Lyrebird and Descript that offer commercial products for generating audio using AI.

4. Video generation: Generating new videos based on a given prompt or input. This can be used for tasks such as creating personalized video messages or animated videos. Think deepfakes.

Overall, generative AI has the potential to enable the creation of a wide range of new content, from simple text to complex multimedia, and can be applied to many different domains and applications.

How is Generative AI Being Misused?

As with every technological advancement, bad actors will find a way to misuse and abuse the tools. Generative AI is no different. Some of the leading misuses of generative AI include:

- Deepfakes: Videos or audio recordings designed to deceive people into thinking they are genuine. Some are silly, like Jerry Seinfeld in Pulp Fiction. Others have a more sinister use, as in the case of a viral deepfake of Ukrainian President Volodymyr Zelenskyy appearing to call for his troops to lay down their weapons.

- Deepfake Advertising: Some organizations are also using generative AI to misappropriate the likeness of celebrities in advertising campaigns.

- Fake News: Generative AI can also be used to produce fake news articles or social media posts that are designed to spread misinformation or influence public opinion. There was an entire site dedicated to generating fake news articles, and the resulting misinformation could have spread widely. Thankfully that site is no longer up, but the tech remains.

- AI Generated Plagiarism: Some students are moving beyond Wikipedia as a source and simply using AI to generate whole term papers. According to Motherboard, tech-savvy students are reportedly getting straight As by using advanced language generators, mainly OpenAI's GPT-3, to write papers for them. And because the AI is creating new material, standard plagiarism checks cannot detect it.

- Scam Chat Bots: Some chatbots are designed to mimic real people and are used to scam others out of money or personal information. These AI generated chatbots can be programmed to be persuasive and to exploit the trust of their victims.

- Social Influencers: AI-generated video, images, and chatbots alike can create social media posts that are designed to spread misinformation or influence public opinion. As the tech gets better at mimicking human communication, telling the difference will become more challenging.

- AI Generated Scientific Papers - AI can greatly accelerate the time it takes to research and create a scientific paper, but it poses major risks. For example, if the training dataset used to generate the paper is biased or incomplete, the resulting paper may contain false or misleading information. Generative AI could be used to create fake or fraudulent scientific papers, which could harm the credibility of the scientific community.

Generative AI and Deepfakes

Deepfake is a term used to describe the technique of using artificial intelligence and machine learning to manipulate or generate audio or video content in a way that makes it appear as if it has been produced by someone else. This technology can be used to create realistic-looking videos or audio recordings of people saying or doing things that they never actually said or did.

Deepfakes can be created using a variety of techniques, including facial recognition and voice imitation algorithms, and they have the potential to be used for a wide range of nefarious purposes, such as creating fake news or spreading disinformation. While deepfake technology is still in its early stages, it has the potential to be a powerful tool for creating convincing fake content that could be difficult to distinguish from the real thing.

Generative AI models such as GANs (Generative Adversarial Networks) can be used to create deepfake videos. These models work by training on a large dataset of real images and then generating new, synthetic images that are like the ones in the training dataset.

In the case of deepfake videos, the GAN would be trained on real videos and then used to generate fake videos that are difficult to distinguish from the real ones. This technology has the potential to be used for both good and bad purposes, so it's important to be aware of its capabilities and to use it responsibly.

Legal and eDiscovery Headaches

The line between AI-generated and human-generated content, both visual and written, is blurring. As these tools get more powerful, their output becomes increasingly indistinguishable from human-generated content. Could you tell, for example, that the entire last section on Deepfakes was written by ChatGPT and not me? Not even a plagiarism detector could spot anything amiss in the text.

As the line between generative AI and human creations continues to blur, a whole host of legal issues arises.

- Copyright: questions around source images in Generative AI data sets

Some artists have raised concerns that generative AI tools like DALL-E learn and mimic their style because their images are present in the data set the algorithm draws on when generating art. The issue will boil down to “What is the transformative and what is infringing? I think there’s no answer to it right now, but we’re gonna see it heavily litigated,” as Debevoise & Plimpton attorney Megan Bannigan noted in a recent Law.com article.

- Who Owns Generative AI Outputs?

Users of DALL-E, for example, are explicitly granted commercial rights for any images they create on the platform, but that does not mean they OWN the images in a traditional sense. “Users get full usage rights to commercialize the images they create with DALL-E, including the right to reprint, sell and merchandise,” OpenAI said.

- Does AI generated Material Qualify for IP or Copyright Protections?

The US Copyright Office Review Board recently upheld a finding that AI-generated artwork could not be copyrighted because it lacks “the nexus between the human mind and creative expression,” a vital element of copyright. Some generative AI platforms have gone as far as explicitly revoking users’ claims to any global IP rights within their terms of service:

“By using DreamStudio Beta and the Stable Diffusion, you hereby agree to forfeit all intellectual property rights claims worldwide. Regardless of legal jurisdiction or intellectual property law applicable therein, including forfeiture of any/all copyright claim(s), to the Content you provide or receive through your use of DreamStudio Beta and the Stable Diffusion beta Discord service.”

- Ownership of your own likeness

Celebrities and other high-profile individuals have their likeness contained in several generative AI data sets, raising questions about whether this infringes on their personal rights. Some have seen generative AI tools create whole advertising campaigns that cash in on their likeness without the consent or even the knowledge of the star.

- Danger of Deep Fakes as Evidence

As generative AI algorithms become more powerful, the potential for increasingly believable deepfakes bleeding into the evidence in a case increases dramatically. Luckily, there are several ways to determine if a video or audio recording is a deepfake, although it can be difficult to do so with complete certainty.

What Can Legal Professionals do?

As generative AI becomes more widespread and is used with increasing frequency by the masses, legal professionals can expect to face more cases about the rights and responsibilities around generative AI, as well as more cases where generative AI outputs are part of the universe of potentially relevant information.

This means not only that the amount of data will continue to expand, but also that the review process itself must build in extra steps for authenticating data when generative AI might be involved. The risk of deepfakes as evidence, especially with high-profile people involved, is not insubstantial, and steps must be taken to prevent AI-generated material from being accepted as the truth.

Some eDiscovery workflow best practices in a post-generative-AI world include:

- Validate the data sources and whether AI generation could have been involved

- Make full use of the existing authentication process under FRE 901

- Employ experts to validate whether a video, image or text might have been manipulated

- Maintain ironclad chain-of-custody procedures
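
To make the chain-of-custody point a little more concrete, here is a minimal Python sketch of one common building block: recording a cryptographic hash of an evidence file when it is collected and re-checking that hash later. The function names (`sha256_of_file`, `record_custody_event`, `verify_unchanged`) and the log format are hypothetical, made up for illustration, and do not describe any particular eDiscovery tool. Keep in mind that a matching hash only suggests the file has not changed since it was logged. It says nothing about whether the content was AI generated in the first place.

```python
import hashlib
from datetime import datetime, timezone


def sha256_of_file(path, chunk_size=65536):
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def record_custody_event(log, path, handler, action):
    """Append a custody entry (hypothetical format) to an in-memory log."""
    entry = {
        "file": path,
        "sha256": sha256_of_file(path),
        "handler": handler,
        "action": action,
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }
    log.append(entry)
    return entry


def verify_unchanged(log, path):
    """Compare the file's current hash with the first hash logged for it."""
    for entry in log:
        if entry["file"] == path:
            return sha256_of_file(path) == entry["sha256"]
    raise ValueError(f"No custody entry found for {path}")
```

In practice, a log like this would live somewhere tamper-resistant rather than in a Python list, and it would sit alongside the provenance and expert review steps above, not replace them.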

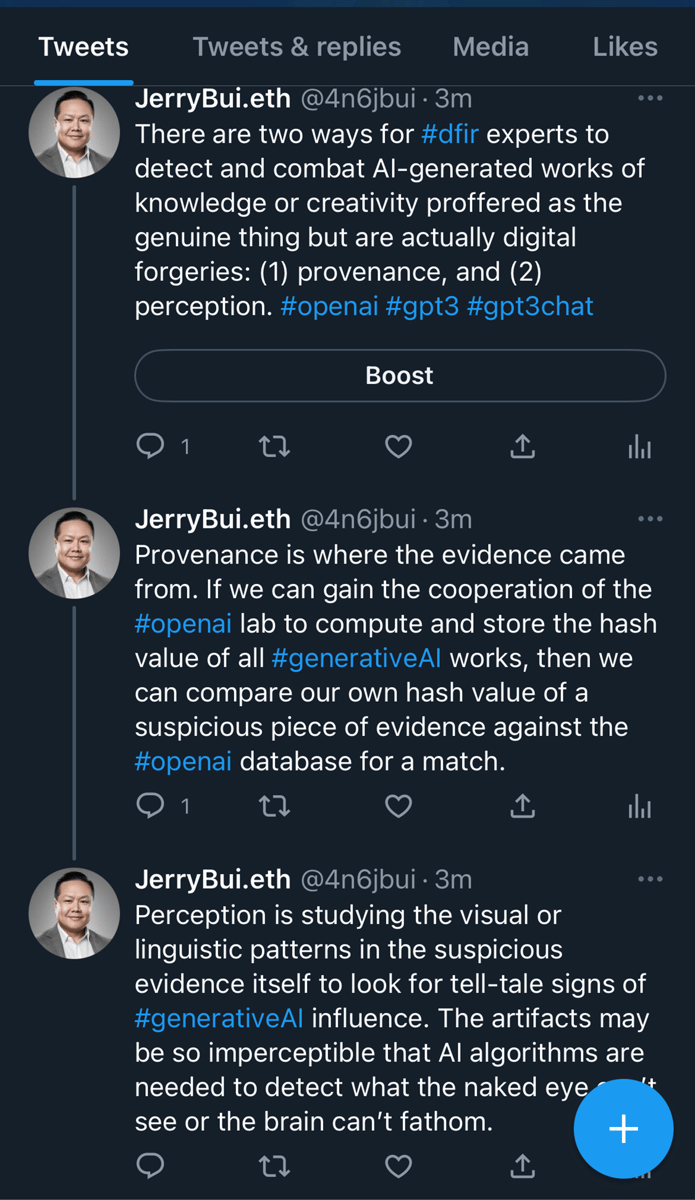

Jerry Bui of FTI recently broke down the authentication conundrum nicely on Twitter. He noted that authenticating and validating potentially AI-generated material comes down to two key factors: 1) provenance, and 2) perception. Simply put, where did the evidence originate, and what patterns can you detect within the data that may or may not indicate AI involvement in its creation?

Debunking Deepfakes

One way to detect a deepfake is to look for telltale signs of manipulation, such as unnatural movements or artifacts in the video or audio. Another way is to use specialized software that is designed to analyze the characteristics of a video or audio recording and flag anything that looks suspicious.

Additionally, experts in the field of digital forensics may be able to use a variety of techniques, such as analyzing the metadata associated with a recording or comparing it to other known recordings of the same person, to determine if it is a deepfake.

However, it is important to note that the technology for creating deepfakes is constantly evolving, and it is becoming increasingly difficult to distinguish a deepfake from a genuine recording.

What the Future Holds

While the current hype around specific generative AI tools like DALL-E and ChatGPT will certainly wane, the longer-term impact of AI-generated content is likely just beginning. According to tech investor Sequoia Capital, “Generative AI is well on the way to becoming not just faster and cheaper, but better in some cases than what humans create by hand.” As such, it is not going away any time soon.

We will likely see generative AI bleed into legal issues, evidence, and yes, even the practice of law itself. The best bet is to stay curious and keep ahead of the hype curve!